Properly building images for Istio deployment — Installing Istio Part 2

In a previous blog I looked at how to install Istio using Helm on Kubernetes with a specific application:

https://www.beyondvirtual.io/blog/istio-helm-CPKS-1-bs/

In addition I noted how Istio uses envoy, injected in each pod, to help manage the application.

Using Envoy in the application also requires that the containers be properly built in order to avoid a “race” condition. In this blog I’ll describe the “race” condition between the application and Envoy, and talk about how to fix it.

First, let’s review what Envoy is.

Envoy proxy is the “heart” of Istio. Without it a majority of the features and capabilities would not be possible.

From the envoyproxy.io site:

Originally built at Lyft, Envoy is a high performance C++ distributed proxy designed for single services and applications, as well as a communication bus and “universal data plane” designed for large microservice “service mesh” architectures. Built on the learnings of solutions such as NGINX, HAProxy, hardware load balancers, and cloud load balancers, Envoy runs alongside every application and abstracts the network by providing common features in a platform-agnostic manner. When all service traffic in an infrastructure flows via an Envoy mesh, it becomes easy to visualize problem areas via consistent observability, tune overall performance, and add substrate features in a single place.

What are the capabilities?

- Dynamic service discovery

- Load balancing

- TLS termination

- HTTP/2 and gRPC proxies

- Circuit breakers

- Health checks

- Staged rollouts with %-based traffic split

- Fault injection

- Rich metrics

How is envoy deployed in the application?

Using my standard fitcycle app (https://github.com/bshetti/container-fitcycle), I can see where envoy fits in the application’s deployment.

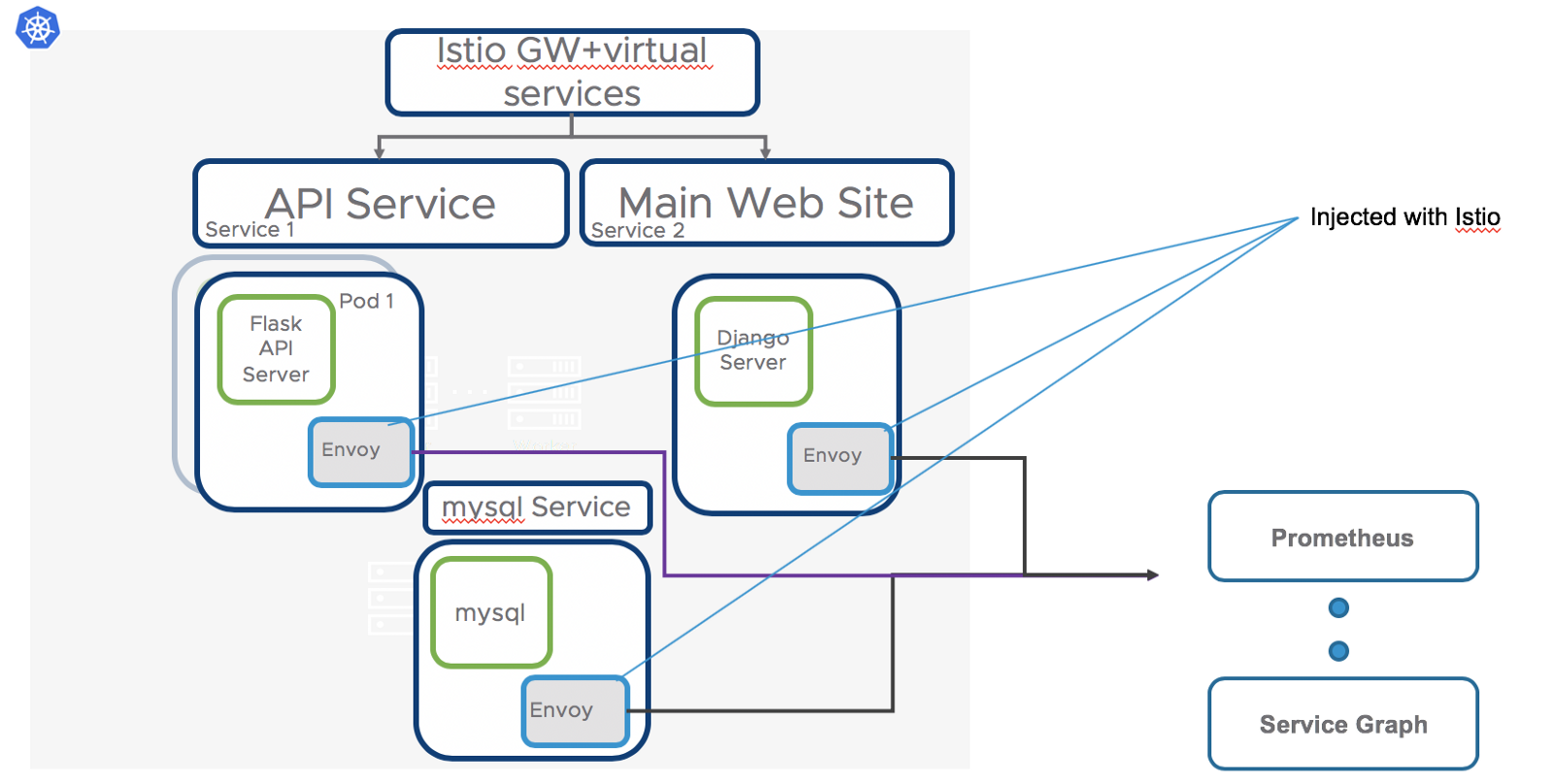

Above I see the application (fitcycle) deployed in a Kubernetes cluster with several pods:

- 2 API replicas supporting the “API Service” — each pod has envoy proxy injected

- django pod with envoy proxy injected

- mysql pod with envoy proxy injected

Once the envoy proxy is deployed, it enables us to push down traffic management policies, send metrics to Prometheus, push traffic and interconnectivity data to the service graph, etc.

So what are the potential issues with Envoy and the application? A timing or “race” condition is one of them.

Envoy vs the application — timing

When envoy is injected into an application, it is added to the pod alongside the application container and any other sidecars. Here is a small diagram of the configuration of the web-server pod from the fitcycle application I have been using in my blogs.

In this example I have three containers running in the web-server pod:

- django (web framework using python)

- statsd collector — telegraf for wavefront stats

- envoy injected via Istio automatically or manually.

When Django initializes, it looks for its database, which can be whatever you configure in the standard Django settings.py file. In our configuration I use mysql:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.mysql',
        'NAME': 'prospect',
        'USER': mysqlId,
        'PASSWORD': mysqlPassword,
        'HOST': mysqlServer,  # Or an IP address that your DB is hosted on
    }
}

The mysql server I am pointing to is the other pod running the mysql container (see the containerized fitcycle application image earlier in this blog).
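As a rough sketch of how values such as mysqlId, mysqlPassword, and mysqlServer could be supplied, the settings can be built from environment variables set on the pod. The variable names MYSQL_ID, MYSQL_PASSWORD, and MYSQL_SERVER and the helper database_settings are hypothetical and only illustrate the idea; the fitcycle repository may wire this up differently.

```python
import os


def database_settings(env=os.environ):
    """Build a Django DATABASES dict from environment variables.

    All environment variable names here are hypothetical placeholders.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.mysql",
            "NAME": "prospect",
            "USER": env["MYSQL_ID"],            # assumed variable name
            "PASSWORD": env["MYSQL_PASSWORD"],  # assumed variable name
            # Fall back to the Kubernetes service name seen in the logs.
            "HOST": env.get("MYSQL_SERVER", "fitcycle-mysql"),
        }
    }
```

In settings.py this could be used as DATABASES = database_settings(), so that every deployment of the same image picks up its credentials from the pod spec.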

Service bring up ordering

Kubernetes normally allows each pod to come up independently, in any order, because it restarts the dependent pods as needed. This is a big benefit of Kubernetes. Hence in our application, I can bring up the services in any order:

- api-server, mysql, web-server

- web-server, api-server, mysql

- mysql, web-server, api-server ←ideal order in a VM based installation

- api-server, web-server, mysql

- etc

Regardless of the order, the application will come up. This flexibility holds for a simple app like our LAMP stack fitcycle application.

However, in most cases ordering is important, and the ideal order for our application is one of:

- mysql, web-server, api-server

- mysql, api-server, web-server

mysql is the main service required by both the api-server and the web-server, hence it needs to be brought up first.
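The dependency described above can be written down as a small graph and sorted, which is one way to derive a valid bring-up order. This is only an illustrative sketch using the standard-library graphlib module (Python 3.9+); the DEPENDENCIES mapping and the bring_up_order helper are assumptions made for the example, not part of the fitcycle deployment.

```python
from graphlib import TopologicalSorter

# Each service maps to the set of services it depends on.
DEPENDENCIES = {
    "mysql": set(),
    "web-server": {"mysql"},
    "api-server": {"mysql"},
}


def bring_up_order(dependencies):
    """Return one valid start order in which every dependency comes first."""
    return list(TopologicalSorter(dependencies).static_order())


order = bring_up_order(DEPENDENCIES)
print(order)  # mysql is always first; the other two may appear in either order
```

Both ideal orders listed above are valid results of this sort, since web-server and api-server do not depend on each other.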

Using envoy with Istio, this order also applies. However, even with the right order, the application deployment can fail.

In particular, a pod deployment can fail because envoy restricts access to other services until it is up.

Waiting for Envoy sidecar in a pod

In our example, I brought up the application using envoy and everything looked as if it was working:

ubuntu@ip-172-31-7-100:~/container-fitcycle/wavefront/django$ kubectl get pods

NAME READY STATUS RESTARTS AGE

api-server-6d7df8c47d-4dhkw 3/3 Running 15 88d

api-server-6d7df8c47d-dtj2t 3/3 Running 15 88d

api-server-6d7df8c47d-qwvcz 3/3 Running 14 88d

fitcycle-mysql-5cbbd87ff6-mvgt2 3/3 Running 0 88d

mobile-server-79bb4b756-b4t94 2/2 Running 0 90d

mobile-server-79bb4b756-hmcms 2/2 Running 0 88d

wavefront-proxy-5b658 1/1 Running 0 88d

web-server-6d9fd94c7f-kgwrr 3/3 Running 0 37s

But I could not get access to the main web page.

When I looked at the log of our web-server, I found the following:

How is this possible when the mysql service is up and running? Several possible issues:

- django doesn’t have the right user/password (not possible since I used the same env variables on other deployments)

- envoy rules need to be adjusted to allow for communication between django and mysql (not possible because, by default, envoy allows communication between pods in the same namespace, and I never changed the configuration)

After some research what I found is the following:

- envoy locks out all communication from the pod to the outside until it is up.

- django initializes faster than envoy in the pod, so it CANNOT connect to mysql and runs to completion without a database connection.

In essence, django needs to WAIT for envoy to come up before testing and using the connection to mysql.

This is the timing issue or “race” condition that needs to be avoided in applications.

The workaround

The fix requires the django container to be built with a “wait” loop in its entrypoint.sh script.

Here is what I inserted into the docker container entrypoint.sh file:

The wait loop ensures that Django waits for mysql to be accessible before it initializes the container and runs the final docker commands.
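To illustrate the idea of such a wait loop, here is a minimal sketch in Python rather than in the shell syntax of entrypoint.sh. It is not the script from the fitcycle image: the function name wait_for_tcp and its retry, delay, and timeout parameters are assumptions chosen for the example. It simply retries a TCP connection until something accepts it.

```python
import socket
import time


def wait_for_tcp(host, port, retries=30, delay=1.0, timeout=2.0):
    """Retry a TCP connection until it succeeds.

    Returns the number of failed attempts that preceded the first
    success, or raises TimeoutError if every attempt failed.
    """
    for failed in range(retries):
        try:
            # create_connection raises OSError (e.g. connection refused)
            # while nothing is accepting connections for this address.
            with socket.create_connection((host, port), timeout=timeout):
                return failed
        except OSError as exc:
            print(f"attempt {failed + 1}: cannot reach {host}:{port} ({exc})")
            time.sleep(delay)
    raise TimeoutError(f"{host}:{port} not reachable after {retries} attempts")
```

An entrypoint could call wait_for_tcp("fitcycle-mysql", 3306) before applying the database migrations, 3306 being the default MySQL port. Note that a plain TCP connect only shows that something accepted the connection; a check that runs a real query through a MySQL client is a stronger test that the database itself is reachable.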

With this in place, I reran the bring-up with Istio and had envoy injected into the pod. The Django logs now looked normal.

ubuntu@ip-172-31-7-100:~/container-fitcycle/wavefront/django$ kubectl logs web-server-65d48b5d76-r44fg --container web-server

ERROR 2003 (HY000): Can't connect to MySQL server on 'fitcycle-mysql' (111 "Connection refused")

ERROR 2003 (HY000): Can't connect to MySQL server on 'fitcycle-mysql' (111 "Connection refused")

ERROR 2003 (HY000): Can't connect to MySQL server on 'fitcycle-mysql' (111 "Connection refused")

1

1

Apply database migrations

2018-11-17 18:05:03,282 django.db.backends DEBUG (0.000) SET SQL_AUTO_IS_NULL = 0; args=None

2018-11-17 18:05:03,343 django.db.backends DEBUG (0.000) SET SQL_AUTO_IS_NULL = 0; args=None

2018-11-17 18:05:03,344 django.db.backends DEBUG (0.001) SHOW FULL TABLES; args=None

2018-11-17 18:05:03,346 django.db.backends DEBUG (0.001) SELECT `django_migrations`.`app`, `django_migrations`.`name` FROM `django_migrations`; args=()

2018-11-17 18:05:05,366 django.db.backends DEBUG (0.000) SET SQL_AUTO_IS_NULL = 0; args=None

2018-11-17 18:05:05,474 django.db.backends DEBUG (0.006) SET SQL_AUTO_IS_NULL = 0; args=None

2018-11-17 18:05:05,475 django.db.backends DEBUG (0.001) SHOW FULL TABLES; args=None

2018-11-17 18:05:05,477 django.db.backends DEBUG (0.001) SELECT `django_migrations`.`app`, `django_migrations`.`name` FROM `django_migrations`; args=()

The three ERROR 2003 lines at the top of the log show the number of times the container waited before Envoy finally came up and the connection to mysql opened.

The website worked as normal.

Summary

It’s best to work through the application architecture and ensure that specific services are properly configured and the docker images are built appropriately. This will avoid timing or “race” issues like the one I saw with Envoy in our deployment.