Easy as pie - Connecting your application to Cosmos DB

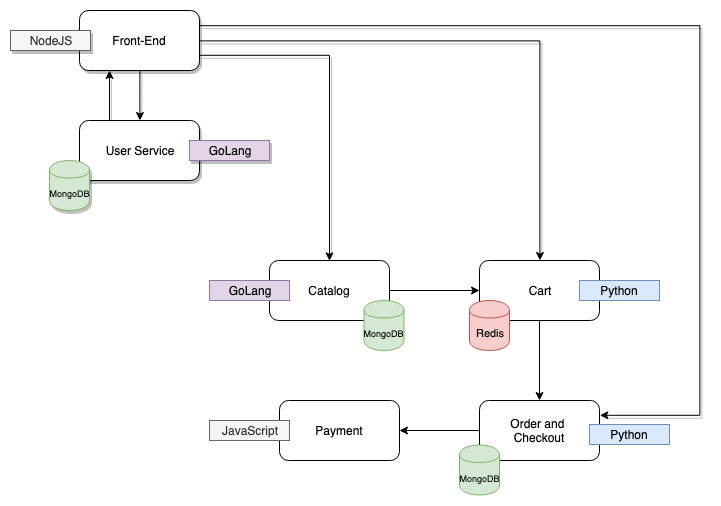

As we’ve discussed in multiple blogs on this site, our application of choice has always been the AcmeShop App. It has multiple services, two of which are databases: Redis and MongoDB.

We built this app to showcase multiple services and to keep the application portable, which is why MongoDB and Redis are included in the app as Kubernetes services. While this architecture is fairly typical for test/dev environments, it’s not typical for production environments. Most production implementations will NOT use container-based databases, for several reasons:

- Managing DBs is generally not a skill associated with developers, DevOps engineers, or cloud admins.

- Production DBs are generally set up in a highly secure and highly available environment.

- Scale is a big DB requirement, especially as the application grows.

- Portability - keeping the database outside the cluster allows the frontend and processing “bits” of the app to be deployed on any cloud, while ensuring there is a single, secure location for the application’s state. It’s generally hard to move DBs around.

While running a database in a container on Kubernetes is not wrong, the issues cited above will drive the database out of the cluster, for example on prem, where it’s set up as a secure, highly available, and scalable service.

In this blog we will show how the AcmeShop App can be configured to connect to a highly scalable, secure, and available cloud database service instead of a self-managed MongoDB. Many options are available:

- DocumentDB from AWS

- MongoDB Atlas for AWS, Azure, and GCP (from MongoDB)

- CosmosDB from Azure

- Google Cloud Datastore

Any of these services is an alternative to deploying and managing your own DB service as part of the application, and they largely remove the need for DB management expertise. All of them are highly available, scalable, and secure.

You simply configure the service, point your application at it, and use it.

In this blog, I will quickly walk through how to connect the AcmeShop App to Azure Cosmos DB, which is a viable alternative to MongoDB because it exposes a MongoDB-compatible API.

One reason we picked CosmosDB from Azure over DocumentDB from AWS is that CosmosDB is more of a true service, while DocumentDB is more of a “managed service”. Here’s why:

- DocumentDB must be deployed in a specific VPC, and the application generally resides in the same VPC. While this enables a fairly secure deployment, it’s also fairly rigid, and many deployments need to reach the DB from outside the VPC.

- CosmosDB, on the other hand, is deployed with a simple process from the Azure portal, and the application connects through a username and password provided by Azure. This allows access from any endpoint permitted by the account’s firewall settings.

Environment

To highlight this configuration, we used the following components:

- Order service from AcmeShop App - It’s written in Python and uses pymongo 3.7.2 to connect to MongoDB

- AKS Cluster where I deployed the modified code

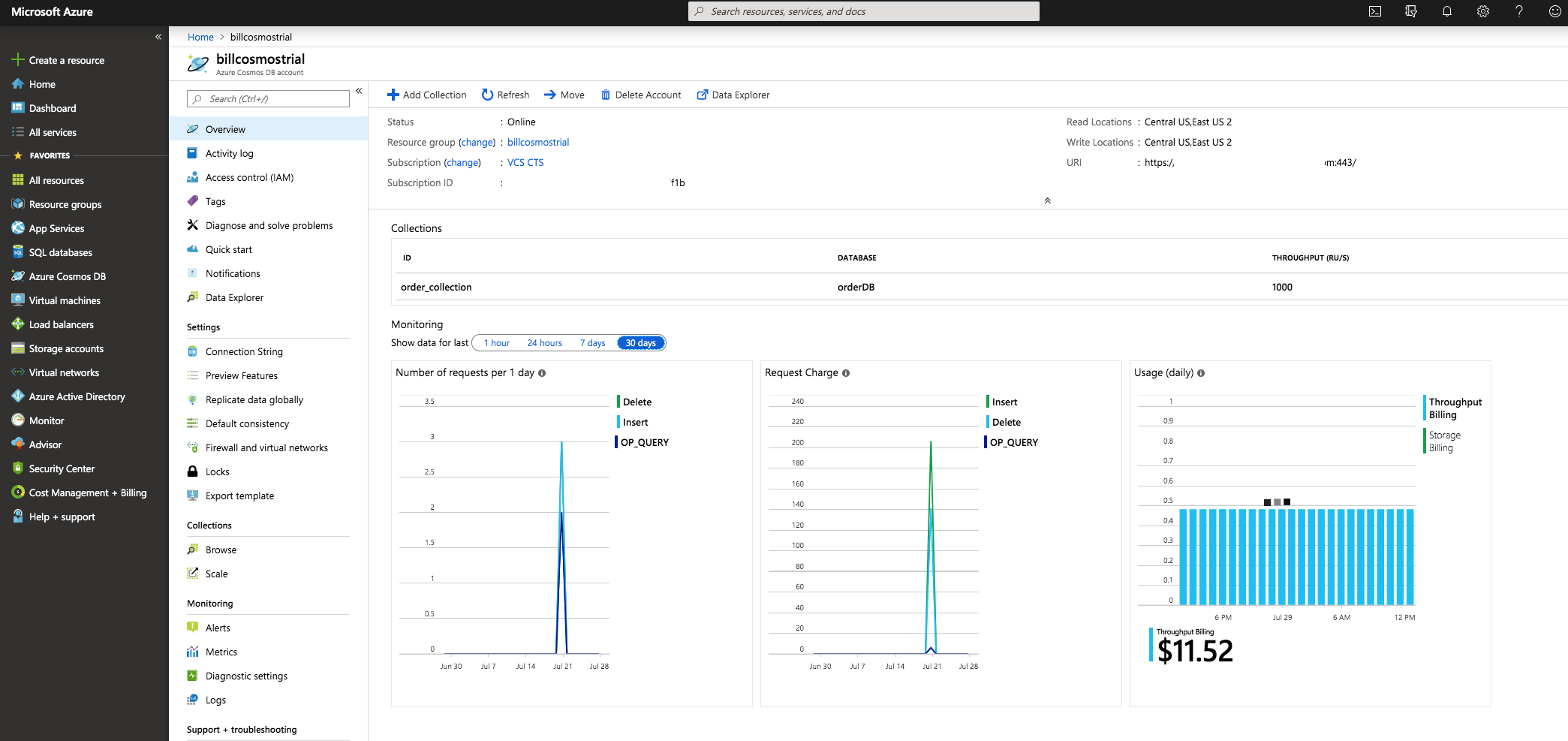

- CosmosDB instance - the alternative to MongoDB in the application

Configuration

The configuration is fairly simple and straightforward. There are three parts:

- Azure CosmosDB

- Modify Python code to handle connecting to CosmosDB

- Docker and Kubernetes modifications (may not be needed, depending on how you set up your manifests)

Azure CosmosDB setup

The Azure configuration is well documented; essentially, follow the steps in the Azure documentation.

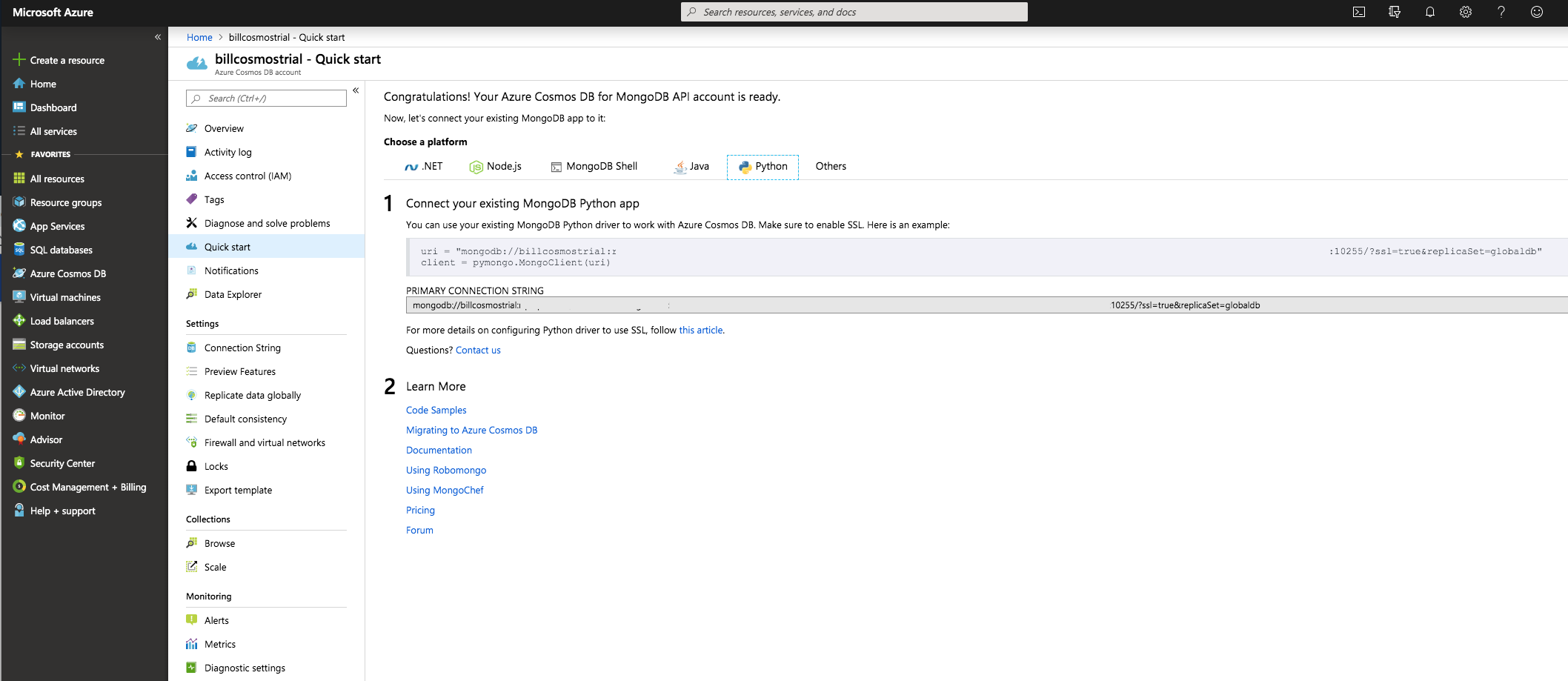

In this configuration we followed the steps outlined in the Python quickstart.

NOTE: We stopped at the “Add a container” step because we use Python to create, access, and modify the data in CosmosDB.

Once CosmosDB is configured, go to the “quickstart” section and select “Python”. The connection code for Python is shown there; it’s a simple cut and paste.

Modifications to application (in Python)

Once you have the URI (which contains the username and password), it’s simple to convert it into the parameters for the MongoClient call in Python.

Here is the code we currently have implemented in order.py:

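```python
import random
import string
import sys

import pymongo
from pymongo import MongoClient
from pymongo import errors as mongoerrors

# `app` and mongohost, mongoport, mongouser, mongopassword are defined
# elsewhere in order.py.
try:
    # Cosmos DB's MongoDB API requires TLS; against Cosmos DB this call likely
    # also needs ssl=True (the portal URI includes ssl=true).
    client = MongoClient(host=mongohost, port=int(mongoport),
                         username=mongouser, password=mongopassword)
    app.logger.info('initiated mongo connection %s', client)
    db = client.orderDB
    orders = db.order_collection
    # Note: this clears any existing orders every time the service starts.
    orders.delete_many({})
except Exception as ex:
    app.logger.error('Error for mongo connection %s', ex)
    sys.exit('Failed to connect to mongo, terminating')


# Generates a random string for the order id
def randomString(stringLength=15):
    letters = string.ascii_lowercase
    return ''.join(random.choice(letters) for _ in range(stringLength))
```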

Here is the URI from Azure’s CosmosDB portal:

```python
uri = "mongodb://billcosmostrial:XXX@YYYY.documents.azure.com:10255/?ssl=true&replicaSet=globaldb"
```

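Here is how the parts of the URI map to the parameters in the Python code:

```python
mongouser = "billcosmostrial"            # user name, before the ':'
mongohost = "YYYY.documents.azure.com"   # host, after the '@'
mongoport = 10255                        # port, after the host
mongopassword = "XXX"                    # password, between ':' and '@'
```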
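Rather than splitting the URI by hand, the service could do it at startup. The sketch below uses only Python's standard `urllib.parse`; the function name `uri_to_client_kwargs` is hypothetical, and it assumes a single-host `mongodb://` URI whose credentials contain no unescaped reserved characters. It also keeps the query options: the Cosmos DB URI carries `ssl=true` and `replicaSet=globaldb`, which the host/port/username/password form of the `MongoClient` call in order.py does not pass, and Cosmos DB's MongoDB API requires TLS.

```python
from urllib.parse import parse_qs, unquote, urlsplit

# Sample URI with the placeholder credentials used in this post.
URI = "mongodb://billcosmostrial:XXX@YYYY.documents.azure.com:10255/?ssl=true&replicaSet=globaldb"


def uri_to_client_kwargs(uri):
    """Split a single-host mongodb:// URI into MongoClient-style keyword arguments.

    Hypothetical helper: multi-host and mongodb+srv URIs are not handled.
    """
    parts = urlsplit(uri)
    if parts.scheme != "mongodb":
        raise ValueError("expected a mongodb:// URI")
    kwargs = {
        # urlsplit lowercases the host name, which is harmless for DNS.
        "host": parts.hostname,
        "port": parts.port or 27017,
        "username": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
    }
    # Carry over query options such as ssl and replicaSet, converting
    # "true"/"false" strings to booleans.
    for key, values in parse_qs(parts.query).items():
        value = values[-1]
        if value.lower() in ("true", "false"):
            value = value.lower() == "true"
        kwargs[key] = value
    return kwargs


if __name__ == "__main__":
    print(uri_to_client_kwargs(URI))
```

The resulting dictionary could then be passed as `MongoClient(**kwargs)`, since pymongo accepts URI options such as `ssl` and `replicaSet` as keyword arguments. Alternatively, `MongoClient` accepts the full URI string directly, which avoids the split altogether; splitting mainly helps when, as here, the service expects separate settings.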

We generally pass these in as environment variables so that we can change them for whichever endpoint we are using (MongoDB, CosmosDB, DocumentDB, etc.):

```python
import os
from os import environ

# Each setting uses its environment variable when it is set and non-empty,
# and otherwise falls back to a default.
if environ.get('ORDER_DB_USERNAME') is not None:
    if os.environ['ORDER_DB_USERNAME'] != "":
        mongouser = os.environ['ORDER_DB_USERNAME']
    else:
        mongouser = ''
else:
    mongouser = ''

if environ.get('ORDER_DB_HOST') is not None:
    if os.environ['ORDER_DB_HOST'] != "":
        mongohost = os.environ['ORDER_DB_HOST']
    else:
        mongohost = 'localhost'
else:
    mongohost = 'localhost'

if environ.get('ORDER_DB_PORT') is not None:
    if os.environ['ORDER_DB_PORT'] != "":
        mongoport = os.environ['ORDER_DB_PORT']
    else:
        mongoport = 27017
else:
    mongoport = 27017

if environ.get('ORDER_DB_PASSWORD') is not None:
    if os.environ['ORDER_DB_PASSWORD'] != "":
        mongopassword = os.environ['ORDER_DB_PASSWORD']
    else:
        mongopassword = ''
else:
    mongopassword = ''
```

Hence ORDER_DB_HOST, ORDER_DB_USERNAME, ORDER_DB_PORT, and ORDER_DB_PASSWORD are all passed in from the Kubernetes manifest.
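The nested checks in the code above all follow one pattern: use the variable if it is set and non-empty, otherwise fall back to a default. A more compact sketch of the same logic, using a hypothetical helper named `setting`, might look like this. It also converts the port to an integer up front, because environment variables always arrive as strings (the original handles this later with `int(mongoport)`).

```python
import os


def setting(name, default):
    """Return environment variable `name`, or `default` if it is unset or empty.

    Hypothetical helper mirroring the `is not None` / `!= ""` checks in order.py.
    """
    value = os.environ.get(name)
    return value if value else default


mongouser = setting("ORDER_DB_USERNAME", "")
mongohost = setting("ORDER_DB_HOST", "localhost")
# Environment values are strings, so convert the port explicitly.
mongoport = int(setting("ORDER_DB_PORT", 27017))
mongopassword = setting("ORDER_DB_PASSWORD", "")
```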

Kubernetes config

When running the order service in K8S, we simply use the following commands to set up the variables as secrets:

```shell
kubectl create secret generic order-mongo-pass --from-literal=password=XXX
kubectl create secret generic order-mongo-user --from-literal=user=billcosmostrial
kubectl create secret generic order-mongo-host --from-literal=host=YYYY.documents.azure.com
kubectl create secret generic order-mongo-port --from-literal=port=10255
```
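`kubectl create secret generic ... --from-literal` stores each value base64-encoded under the Secret's `data` field, with type `Opaque`. The sketch below builds the equivalent Secret object as a Python dictionary, which can be handy for reviewing exactly what the cluster will store. The helper name `secret_manifest` is hypothetical. Keep in mind that base64 is an encoding, not encryption: anyone who can read the Secret can recover the password.

```python
import base64
import json


def secret_manifest(name, literals):
    """Build a Secret object roughly equivalent to
    `kubectl create secret generic NAME --from-literal=KEY=VALUE ...`.

    Hypothetical helper: values are base64-encoded, as Kubernetes expects in `data`.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in literals.items()
        },
    }


if __name__ == "__main__":
    port_secret = secret_manifest("order-mongo-port", {"port": "10255"})
    # kubectl apply accepts JSON as well as YAML.
    print(json.dumps(port_secret, indent=2))
```

Because `literals` can hold several keys, the four values could also live in a single Secret (with keys `host`, `port`, `user`, and `password`); each `secretKeyRef` in the manifest would then use the same `name` with a different `key`.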

Modify the K8S manifest to take these values:

```yaml
# Container entry for the order service; each secretKeyRef key must match
# the key used in the corresponding kubectl create secret command.
- image: gcr.io/vmwarecloudadvocacy/acmeshop-order:1.0.1
  name: order
  env:
  - name: ORDER_DB_HOST
    valueFrom:
      secretKeyRef:
        name: order-mongo-host
        key: host
  - name: ORDER_DB_PASSWORD
    valueFrom:
      secretKeyRef:
        name: order-mongo-pass
        key: password
  - name: ORDER_DB_PORT
    valueFrom:
      secretKeyRef:
        name: order-mongo-port
        key: port
  - name: ORDER_DB_USERNAME
    valueFrom:
      secretKeyRef:
        name: order-mongo-user
        key: user
  - name: ORDER_PORT
    value: '6000'
  - name: PAYMENT_PORT
    value: '9000'
  - name: PAYMENT_HOST
    value: 'payment'
```
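In Kubernetes, if a `secretKeyRef` names a key that the referenced Secret does not contain (for example, pointing ORDER_DB_PORT at key `password` in `order-mongo-port`), the pod typically fails to start with `CreateContainerConfigError` unless the reference is marked `optional`. A quick way to catch such a mismatch before deploying is to cross-check the references against the keys you created. This sketch hard-codes both sides as plain Python data (the hypothetical `SECRETS` and `ENV_REFS` tables mirror the kubectl commands and manifest in this post); a real check would read them from the YAML.

```python
# Secrets as created with kubectl: secret name -> keys it defines.
SECRETS = {
    "order-mongo-host": {"host"},
    "order-mongo-pass": {"password"},
    "order-mongo-port": {"port"},
    "order-mongo-user": {"user"},
}

# Environment variables from the container spec: variable -> (secret name, key).
ENV_REFS = {
    "ORDER_DB_HOST": ("order-mongo-host", "host"),
    "ORDER_DB_PASSWORD": ("order-mongo-pass", "password"),
    "ORDER_DB_PORT": ("order-mongo-port", "port"),
    "ORDER_DB_USERNAME": ("order-mongo-user", "user"),
}


def missing_keys(env_refs, secrets):
    """Return (variable, secret, key) for each reference to a key the secret lacks."""
    problems = []
    for var, (secret, key) in sorted(env_refs.items()):
        if key not in secrets.get(secret, set()):
            problems.append((var, secret, key))
    return problems


if __name__ == "__main__":
    print(missing_keys(ENV_REFS, SECRETS) or "all secretKeyRef keys resolve")
```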

NOTE: With this manifest we could have run the application on AWS EKS, on-prem PKS, Google GKE, etc. The data stays in Azure’s highly available, scalable, secure CosmosDB. We now have flexibility.

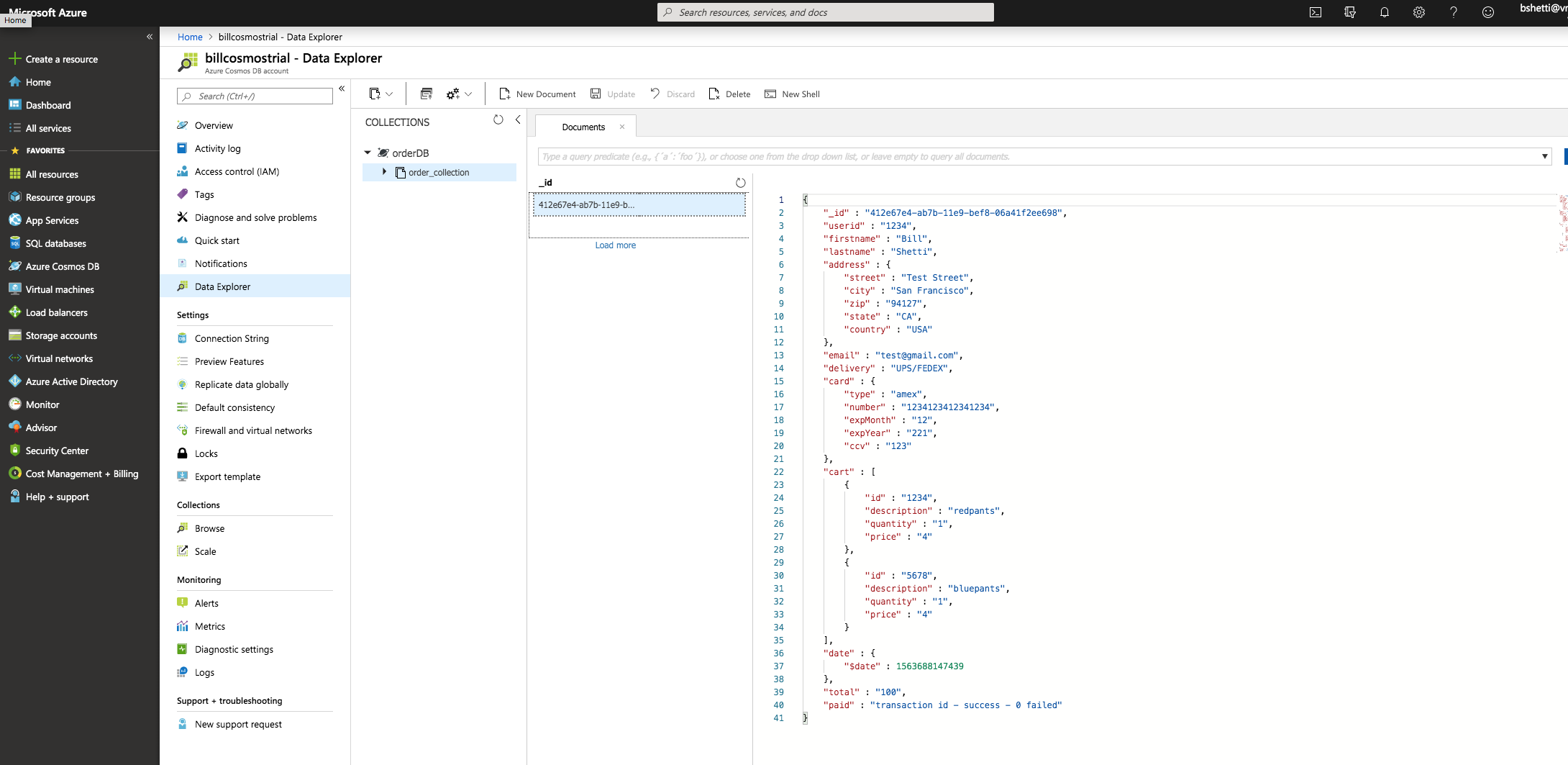

Viewing data in CosmosDB

Once we apply the K8S manifest with the secrets added in, the deployment connects to CosmosDB. We can then inspect the data with the mongo CLI, or view it directly in the Azure Cosmos DB portal.

CONCLUSION

For production deployments, a cloud-based database service is generally a better avenue than a self-managed database. It will help you:

- Reduce the DB management expertise you need, almost down to zero

- Ensure the database is highly available and secure, since AWS, Azure, and GCP generally manage data far better than most enterprises

- Automatically scale the database as needed

- Keep the application portable, especially if it runs on Kubernetes

Hopefully this has given you the insight and confidence to run with a cloud database service instead of relying on your own.